In law enforcement, it is extremely important to identify persons of interest quickly. In most cases, this is done by showing a picture of the person to multiple law enforcement officers in hopes that someone recognizes the person. In Washington County, Oregon, there are nearly 20,000 bookings (when a person is processed into the jail) every year. As time passes, officers’ memories of individual bookings fade. Investigations also tend to move very quickly, so waiting for an officer to come on duty to identify a picture might mean missing the opportunity to solve the case.

In this post, I discuss our decision to use AWS for facial recognition. I walk through setting up web and mobile applications using AWS, demonstrating how easy it is even for someone who is new to AWS. I then show how we used Amazon Rekognition to build a powerful tool for solving crimes.

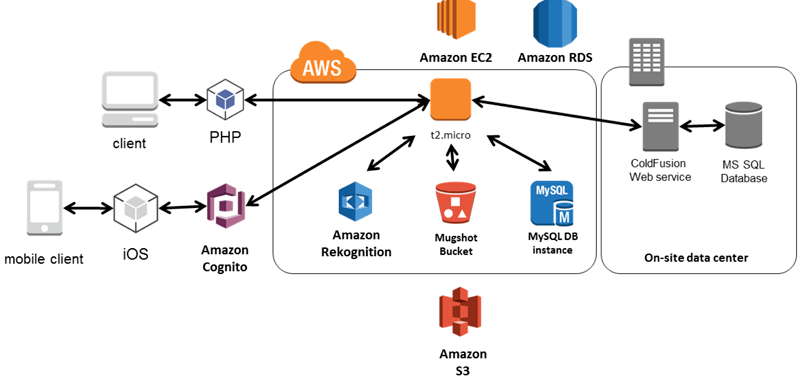

The following diagram shows the system architecture:

Setup

When we were presented with the problem of quickly identifying persons of interest, it seemed like something we could automate instead of relying on the usual manual process. We wanted not only to get responses back to officers within seconds but also to ensure that officers’ memories were no longer a limiting factor.

This is where we turned to AWS and Amazon Rekognition. We had not used AWS before, but a few days before we were asked to fix the identification process, we had read the release announcement for Amazon Rekognition. It seemed like a great product to test.

Setup was fairly straightforward. In the Washington County jail management system (JMS), we have an archive of mugshots going back to 2001. We needed to get the mugshots (all 300,000 of them) into Amazon S3. Then we needed to index them all in Amazon Rekognition, which took about 3 days.

Our JMS lets us tag each shot as a front view or a side view, or as a photo of scars, marks, or tattoos. We wanted only the front views, so we used those tags to get a list of just the front-view shots.
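The JMS export format isn't described in this post, so the following minimal Python sketch assumes a hypothetical `PhotoRecord` with `booking_id`, `tag`, and `path` fields and simply keeps the front-view shots. Our own scripts were written in PHP; Python is used here only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhotoRecord:
    # Hypothetical shape of one photo row exported from the JMS.
    booking_id: str
    tag: str   # e.g. "front", "side", "scar", "mark", "tattoo"
    path: str  # location of the image file on the JMS server


def front_view_photos(records):
    """Keep only front-view shots, the ones worth indexing for face search."""
    return [r for r in records if r.tag.lower() == "front"]


photos = [
    PhotoRecord("B1001", "front", "/exports/B1001_front.jpg"),
    PhotoRecord("B1001", "side", "/exports/B1001_side.jpg"),
    PhotoRecord("B1002", "tattoo", "/exports/B1002_tattoo.jpg"),
    PhotoRecord("B1003", "Front", "/exports/B1003_front.jpg"),
]
print([p.booking_id for p in front_view_photos(photos)])  # ['B1001', 'B1003']
```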

Uploading to S3 was easy. At first, we simply created a bucket and used the web interface to upload approximately 1,000 images at a time manually. This took a while, but it didn’t take much of our time because we could set it and forget it.

Implementation (here be code)

Later, we used a script to upload the images. We used PHP to move the files from our JMS servers to the web server that we use for AWS and to process them there. On that server, a short script then placed the images in S3.

S3 made it simple to store the files.
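The upload script itself isn't reproduced in this post. As a rough Python equivalent, here is a sketch built on the S3 `put_object` call from boto3 (the AWS SDK for Python). The `mugshots/<booking_id>.jpg` key scheme, the bucket name, and the local export folder are hypothetical. The client is passed in as a parameter, so the helper that builds the request can be checked without AWS credentials.

```python
from pathlib import Path


def put_object_params(bucket: str, booking_id: str, image_bytes: bytes) -> dict:
    """Build put_object arguments using a hypothetical mugshots/<booking_id>.jpg key."""
    return {
        "Bucket": bucket,
        "Key": f"mugshots/{booking_id}.jpg",
        "Body": image_bytes,
        "ContentType": "image/jpeg",
    }


def upload_directory(s3_client, bucket: str, directory: str) -> int:
    """Upload every <booking_id>.jpg in a local export folder; return the number uploaded."""
    uploaded = 0
    for path in sorted(Path(directory).glob("*.jpg")):
        s3_client.put_object(**put_object_params(bucket, path.stem, path.read_bytes()))
        uploaded += 1
    return uploaded


# Usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   upload_directory(boto3.client("s3"), "example-mugshot-bucket", "/exports/front")
```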

After the 300,000 images were uploaded to Amazon S3, we needed to index all of them. In hindsight, it would have been easier to index each image in the same script that I used to upload it to S3. This would have eliminated the need to check which images had already been indexed.
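Combining the two steps could look something like the following Python sketch, which stores a mugshot with `put_object` and immediately indexes it with the Rekognition `index_faces` call. The key scheme and the use of the booking ID as the `ExternalImageId` are assumptions for illustration. `MaxFaces=1` keeps at most one face per image, which suits a front-view mugshot but is not necessarily what we used.

```python
def index_faces_params(collection_id: str, bucket: str, key: str, booking_id: str) -> dict:
    """Build IndexFaces arguments for a mugshot that is already stored in S3."""
    return {
        "CollectionId": collection_id,
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        # The booking ID is the back reference returned by later face searches.
        "ExternalImageId": booking_id,
        # Keep at most one face per mugshot.
        "MaxFaces": 1,
    }


def upload_and_index(s3_client, rekognition_client, bucket, collection_id, booking_id, image_bytes):
    """Store and index a mugshot in one pass, so no separate 'already indexed?' check is needed."""
    key = f"mugshots/{booking_id}.jpg"  # hypothetical key scheme
    s3_client.put_object(Bucket=bucket, Key=key, Body=image_bytes, ContentType="image/jpeg")
    response = rekognition_client.index_faces(
        **index_faces_params(collection_id, bucket, key, booking_id)
    )
    # FaceRecords is empty when Rekognition finds no usable face; the caller can flag that image.
    return [record["Face"]["FaceId"] for record in response.get("FaceRecords", [])]


# Usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   upload_and_index(boto3.client("s3"), boto3.client("rekognition"),
#                    "example-mugshot-bucket", "mugshots", "B1001", data)
```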

To index the faces, we simply looped through every image in the bucket and indexed each one into an Amazon Rekognition collection.

Again, despite having no experience with AWS, we found this extremely easy, and it worked very well. Be sure to set the ExternalImageId property when you index each face, because it is how you identify what Amazon Rekognition returns when you do a face search. Without it, you have no back reference to the S3 object.

After all of the images were indexed, we built a quick front end that let us search the collection for matches whenever we received a new image. A simple form that submitted to a PHP script provided that front end.

When an image was submitted through the form, a simple script searched the collection for matching faces.
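A Python version of that search might look like the following sketch. It sends the submitted photo's bytes to `search_faces_by_image` and returns `(ExternalImageId, similarity)` pairs, best first. The 80% threshold is an assumed choice that also happens to be the API's default `FaceMatchThreshold`, and `max_faces=10` is arbitrary. Note that the API searches using the largest face it detects in the submitted image.

```python
def search_params(collection_id: str, image_bytes: bytes,
                  threshold: float = 80.0, max_faces: int = 10) -> dict:
    """Build SearchFacesByImage arguments for a photo submitted through the form."""
    return {
        "CollectionId": collection_id,
        "Image": {"Bytes": image_bytes},
        "FaceMatchThreshold": threshold,  # minimum similarity (percent) to return
        "MaxFaces": max_faces,
    }


def summarize_matches(response: dict) -> list:
    """Reduce a SearchFacesByImage response to (ExternalImageId, similarity) pairs, best first."""
    pairs = [
        (match["Face"].get("ExternalImageId"), match["Similarity"])
        for match in response.get("FaceMatches", [])
    ]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


def search_mugshots(rekognition_client, collection_id: str, image_bytes: bytes, **options) -> list:
    """Search the collection and return ranked matches for a person to review."""
    response = rekognition_client.search_faces_by_image(
        **search_params(collection_id, image_bytes, **options)
    )
    return summarize_matches(response)
```

The similarity score only ranks candidates; as the examples later in this post show, a person still reviews the matches and confirms an identification through other evidence.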

With the results in the $results[] array, I used each match’s ExternalImageId to display the corresponding image from S3.
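To show a match in the browser, one option is a short-lived presigned URL, which lets the bucket stay private. This Python sketch assumes the same hypothetical `mugshots/<booking_id>.jpg` naming, so the `ExternalImageId` maps straight back to an S3 key. `generate_presigned_url` is the boto3 S3 client method, and the five-minute expiry is an arbitrary choice.

```python
import html


def key_for_external_id(external_id: str) -> str:
    # Inverse of the hypothetical "mugshots/<booking_id>.jpg" naming scheme.
    return f"mugshots/{external_id}.jpg"


def match_image_url(s3_client, bucket: str, external_id: str, expires_in: int = 300) -> str:
    """Return a presigned GET URL so the browser can load a mugshot from a private bucket."""
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key_for_external_id(external_id)},
        ExpiresIn=expires_in,
    )


def match_img_tag(url: str, external_id: str, similarity: float) -> str:
    """Render one search result as an <img> tag, escaping values for HTML."""
    return (
        f'<img src="{html.escape(url)}" '
        f'alt="Booking {html.escape(external_id)} ({similarity:.1f}% similar)">'
    )
```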

We also used the ExternalImageId to query our database for information about the booking. We accomplished this with a simple AJAX call to the web service we set up on our JMS server.
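The JMS web service and its interface are specific to our agency, so the endpoint path and `booking_id` parameter below are hypothetical. We made the call with AJAX from the search page. This Python sketch only shows the shape of the same request, using the standard library.

```python
import json
import urllib.parse
import urllib.request


def booking_lookup_url(base_url: str, booking_id: str) -> str:
    """Build the lookup URL; the /bookings path and booking_id parameter are hypothetical."""
    query = urllib.parse.urlencode({"booking_id": booking_id})
    return f"{base_url.rstrip('/')}/bookings?{query}"


def fetch_booking(base_url: str, booking_id: str, timeout: float = 5.0) -> dict:
    """GET booking details for one match and decode the JSON body."""
    with urllib.request.urlopen(booking_lookup_url(base_url, booking_id), timeout=timeout) as resp:
        return json.load(resp)
```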

After setup, we were ready to test the tool. The obvious test would have been to run surveillance and other images of known suspects from solved cases and evaluate the accuracy of the results. However, because we already knew who those suspects were, that knowledge could have biased our review. Instead, we had a detective send us 20 random pictures of individuals whose identities he knew but we didn’t. We ran all of them through the system, reviewed the results to pick the face that we thought matched best, and sent our picks to the detective to check. In 75% of the cases, the results correctly identified the person in the photo.
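For a sample of 20 photos, a 75% rate corresponds to 15 correct identifications:

```latex
\text{accuracy} = \frac{\text{photos correctly identified}}{\text{photos tested}} = \frac{15}{20} = 0.75 = 75\%
```

Each photo moves the measured rate by $1/20 = 5$ percentage points, so with a sample this small the figure is a rough estimate.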

After testing, we wanted to put the tool directly into officers’ hands, so we created a mobile application.

Again, we created a simple UI for capturing an image and processing it with Amazon Rekognition. The code for searching faces is fairly straightforward.
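Whatever the client platform, it helps to check a photo before spending a search call on it. The following Python sketch assumes, hypothetically, that the app uploads the captured photo to a backend that then calls `search_faces_by_image`. It rejects empty files, files above a conservative reading of Rekognition's 5 MB limit for images passed as raw bytes, and anything that isn't JPEG or PNG, the two formats Rekognition accepts.

```python
from typing import Optional

# Conservative reading of Rekognition's 5 MB limit for images sent as raw bytes.
MAX_IMAGE_BYTES = 5_000_000
JPEG_MAGIC = b"\xff\xd8\xff"
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"


def check_photo(image_bytes: bytes) -> Optional[str]:
    """Return a message for the officer if the photo can't be searched, else None."""
    if not image_bytes:
        return "No photo was captured."
    if len(image_bytes) > MAX_IMAGE_BYTES:
        return "Photo is larger than 5 MB; retake it at a lower resolution."
    if not image_bytes.startswith((JPEG_MAGIC, PNG_MAGIC)):
        return "Only JPEG and PNG photos are supported."
    return None
```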

After we made both the mobile application and the web application available, we started seeing results. For example, we caught a suspect based on an image taken by a camera at a self-checkout kiosk in a big-box hardware store.

Early in 2017, an unknown suspect visited a hardware store, filled a basket with expensive items, and scanned them at the self-checkout kiosk. Before finishing the checkout process, the suspect picked up the merchandise and walked out of the store. The checkout kiosk’s camera captured a great shot of him.

Typically, this would initiate a manual process where we show the image to multiple law enforcement officers and hope that someone recognizes the suspect.

This time, we ran the image through our facial recognition system and got four matches that Amazon Rekognition scored above 80% similarity. One of the men looked very familiar to us, so we gave his name to the detective in charge of the investigation. The detective did a quick search of Facebook and found a picture of him that showed many facial similarities to the suspect. The best part? In that picture, he was wearing the same hoodie as the theft suspect captured by the kiosk camera.

In another example, a surveillance camera captured the image of a man using a credit card that was later reported as stolen. Because of the low resolution and high angle of the image, it was difficult to tell who it was just by looking at it. When we ran it through Amazon Rekognition, we received a match with greater than 95% similarity.

In a final example, we were searching for a person of interest who was posting photos on Facebook under a pseudonym. Because of some of his posts, a local law enforcement agency needed to identify and speak with him. His profile picture showed him lying on a bed covered in dollar bills. We used this image to search our mugshots and found a match with close to 100% similarity. After we identified the individual, officers were able to discuss the posts with him and ensure that he and the public were safe.

Conclusion

In this post, I showed how to use Amazon Rekognition for facial recognition. I also showed how easy it is to design and implement a facial recognition system in AWS. Amazon Rekognition has become a powerful tool for identifying suspects for my agency. As the service improves, I hope to make it the standard for facial recognition in law enforcement.